Web Crawling - Importance and Benefits for Your Business

In our previous discussion, we talked about Crawling, Indexing, and Ranking and explained how Google’s search engine works. It was part of our main series, SEO Essentials.

In this post, we take an in-depth look at web crawling and cover what you need to know about the topic, so you have the information to make the most of it in the long run.

We’ll break it down and keep it as simple as possible.

Ready?

Let’s go.

What is Web Crawling?

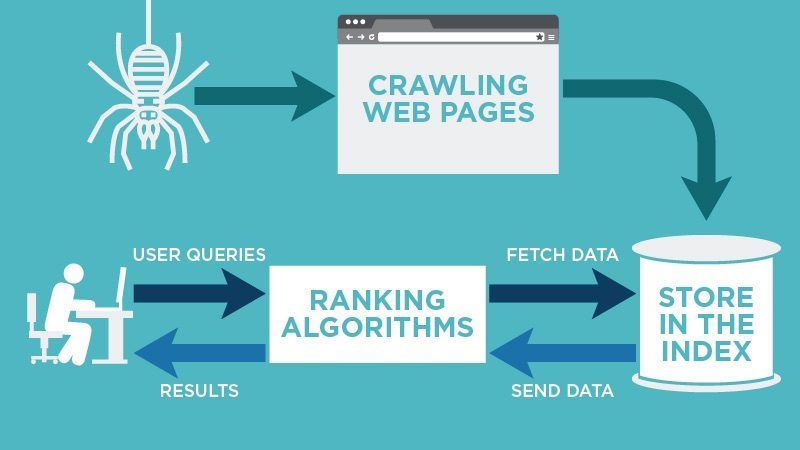

Crawling generally refers to the process used to collect and index the information contained on websites and web pages.

It differs from web scraping in that web crawling collects the URLs and links used for web scraping. And without web crawling, data extraction would be random, unorganised, and completely ineffective.

Image Source: neilpatel.com/blog/deindex-your-pages/
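To make the idea of collecting URLs and links concrete, here is a minimal sketch of a breadth-first crawler in Python using only the standard library. It illustrates the general technique, not how any particular search engine works. The `fetch` function is a hypothetical stand-in supplied by the caller (a real HTTP client in practice, a dictionary of pages in the example), and the `max_pages` limit is our own assumption to keep the crawl bounded.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, List, Optional
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag it sees."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_links(page_url: str, html: str) -> List[str]:
    """Return absolute URLs (with any #fragment removed) linked from the page."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return [urldefrag(urljoin(page_url, href))[0] for href in parser.hrefs]


def crawl(seed: str, fetch: Callable[[str], Optional[str]], max_pages: int = 50) -> List[str]:
    """Breadth-first crawl from `seed`; returns the URLs in the order they were visited."""
    frontier = deque([seed])  # URLs waiting to be visited
    seen = {seed}             # URLs already queued, so none is queued twice
    visited: List[str] = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:  # page could not be retrieved
            continue
        visited.append(url)
        for link in extract_links(url, html):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


# Usage with an in-memory "website" instead of real HTTP requests:
site = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b#top">B</a>',
    "https://example.com/a": '<a href="/">Home</a>',
    "https://example.com/b": "<p>No links here</p>",
}
print(crawl("https://example.com/", site.get))
# ['https://example.com/', 'https://example.com/a', 'https://example.com/b']
```

Breadth-first order means pages close to the starting point are visited first, and the `seen` set is what stops the crawler from looping forever when pages link back to each other.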

What is a Web Crawler?

A web crawler (also called a spider or search engine bot) retrieves and indexes information from across the web. Its purpose is to learn about every web page on the internet, so that the information can be retrieved when needed and is indexed and up to date when a user runs a search query.

These bots are almost always operated by search engines. By applying a search algorithm to the data collected by web crawlers, search engines can return relevant links for user queries: the list of web pages that appears after a user searches on Google, Bing, or any other search engine.
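Before any search algorithm can run, the crawled pages have to be organised for fast lookup. A common structure for this is an inverted index, which maps each word to the pages containing it. The sketch below assumes the crawler has already stored each page’s text; the function names are our own, and real search engines rank results with far more signals than this simple "contains every word" match.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    """Lower-case the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: Dict[str, str]) -> Dict[str, Set[str]]:
    """Inverted index: map each word to the set of URLs whose text contains it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index: Dict[str, Set[str]], query: str) -> List[str]:
    """Return the URLs that contain every word in the query, sorted alphabetically."""
    terms = tokenize(query)
    if not terms:
        return []
    matches = set.intersection(*(index.get(term, set()) for term in terms))
    return sorted(matches)


crawled = {
    "https://example.com/shoes": "Red running shoes on sale",
    "https://example.com/hats": "Red wool hats",
}
index = build_index(crawled)
print(search(index, "red shoes"))  # ['https://example.com/shoes']
print(search(index, "red"))        # ['https://example.com/hats', 'https://example.com/shoes']
```

Because the index is built once, ahead of time, answering a query only means looking up a few words rather than rescanning every page, which is why results can appear in a fraction of a second.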

Why Are Web Crawlers Called 'Spiders'?

The Internet, or at least the part that most users access, is also known as the World Wide Web – in fact, that’s where the “www” part of most website URLs comes from. It was only natural to call search engine bots “spiders” because they crawl all over the Web, just as real spiders crawl on spiderwebs.

Classes of Web Crawlers

As the way these tools are developed has advanced, there are currently two different classes of web crawlers.

1. Browser-based

These crawlers function only as extensions within a browser. They may also be API-based and connect only with programs that support this feature. However, they are limited in many ways: they are not easy to customise or scale up and can only collect what the central server allows.

2. Self-built / Ready-to-use

These are more encompassing and can handle almost any platform or website. They can also be easily customised to serve different needs, scaled up, or integrated with other necessary tools, such as proxies. However, they may be more expensive, need more maintenance, and require particular technical know-how to build or operate successfully.

Types of Web Crawlers

Web crawlers are further classified into four types based on how they operate.

1. Focused

A conventional web crawler follows every hyperlink on a web page. A focused crawler, by contrast, finds, indexes, and downloads only web content that is relevant to a specific topic, delivering more targeted results: it follows and indexes the most relevant links while discarding irrelevant ones.
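One simple way to build a focused crawler is to score every discovered link before downloading it. In this sketch, the score is just the number of topic keywords found in the link’s anchor text and URL (an assumption on our part; production systems often use trained classifiers instead). Links scoring zero are discarded, and the rest are visited highest score first. `FocusedFrontier` and `relevance` are hypothetical names.

```python
import heapq
import re
from typing import List, Set, Tuple


def relevance(text: str, keywords: Set[str]) -> int:
    """Number of distinct topic keywords that appear in the text."""
    words = set(re.findall(r"[a-z0-9]+", text.lower()))
    return len(words & keywords)


class FocusedFrontier:
    """Queue of links to visit: irrelevant links are dropped, relevant ones come out best first."""

    def __init__(self, keywords: Set[str]) -> None:
        self.keywords = {k.lower() for k in keywords}
        self._heap: List[Tuple[int, int, str]] = []
        self._order = 0             # tie-breaker: equal scores leave in insertion order
        self._queued: Set[str] = set()

    def add(self, url: str, anchor_text: str) -> bool:
        """Score a discovered link by its anchor text and URL; queue it only if relevant."""
        if url in self._queued:
            return False
        score = relevance(f"{anchor_text} {url}", self.keywords)
        if score == 0:
            return False            # off-topic: never downloaded
        self._queued.add(url)
        heapq.heappush(self._heap, (-score, self._order, url))  # negate: heapq is a min-heap
        self._order += 1
        return True

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


frontier = FocusedFrontier({"running", "shoes"})
frontier.add("https://example.com/shoe-care", "Caring for your shoes")
frontier.add("https://example.com/recipes", "Pasta recipes")
frontier.add("https://example.com/running-shoes", "Running shoes guide")
print(len(frontier))   # 2
print(frontier.pop())  # https://example.com/running-shoes
```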

2. Incremental

After a web crawler has crawled and indexed a page, it returns to that URL regularly to refresh its collection, replacing out-of-date pages and links with current ones. Incremental crawling is this process of revisiting and recrawling previously seen URLs. Recrawling reduces inconsistencies between the downloaded copies and the live pages.
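How often should a crawler come back? The sketch below uses one simple heuristic, which is our assumption rather than a standard: fingerprint each page with a hash, halve the revisit interval when the content has changed, and double it when it has not, within fixed bounds. Times are plain numbers of hours so the example runs without any waiting, and the class names are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PageRecord:
    content_hash: str      # fingerprint of the last downloaded copy
    interval_hours: float  # how long to wait before the next visit
    next_visit: float      # time (in hours) when the page is due again


class IncrementalScheduler:
    MIN_HOURS = 1.0
    MAX_HOURS = 24.0 * 30

    def __init__(self, initial_interval: float = 24.0) -> None:
        self.initial_interval = initial_interval
        self.records: Dict[str, PageRecord] = {}

    def record_visit(self, url: str, content: str, now: float) -> bool:
        """Store the page's fingerprint and reschedule it; return True if the content changed."""
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        record = self.records.get(url)
        if record is None:  # first visit: use the default interval
            self.records[url] = PageRecord(digest, self.initial_interval, now + self.initial_interval)
            return True
        changed = digest != record.content_hash
        if changed:  # page is changing: come back sooner
            record.interval_hours = max(self.MIN_HOURS, record.interval_hours / 2)
        else:        # page is stable: come back later
            record.interval_hours = min(self.MAX_HOURS, record.interval_hours * 2)
        record.content_hash = digest
        record.next_visit = now + record.interval_hours
        return changed

    def due(self, now: float) -> List[str]:
        """URLs whose next visit time has arrived."""
        return sorted(url for url, r in self.records.items() if r.next_visit <= now)


scheduler = IncrementalScheduler()
scheduler.record_visit("https://example.com/prices", "v1", now=0)  # first visit
print(scheduler.due(now=23))  # []
print(scheduler.due(now=24))  # ['https://example.com/prices']
```

The effect is that frequently updated pages (such as price lists) get recrawled more often than pages that rarely change, which keeps the collection fresh without wasting requests.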

3. Distributed

A distributed crawler divides the crawling work among many crawlers running simultaneously on different domains.

Many machines use a distributed computing strategy to index the internet together. Some of these systems may let people contribute their own processing power and bandwidth to crawl web pages. Spreading the workload across numerous processors reduces the costs associated with operating large computing clusters.
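A common way to divide the work between machines is to hash each URL’s host name and use the result to pick a machine, so every page of a given site is handled by the same node. The sketch below shows that idea with a hypothetical `num_nodes` count; the machines themselves and the messaging between them are left out.

```python
import hashlib
from typing import Dict, Iterable, List
from urllib.parse import urlsplit


def assign_node(url: str, num_nodes: int) -> int:
    """Choose which crawler machine owns a URL, based only on its host name."""
    host = urlsplit(url).hostname or ""
    # sha256 gives the same answer on every machine; Python's built-in hash() of a str
    # is randomised per process, so it is unsuitable for coordinating separate machines.
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes


def partition(urls: Iterable[str], num_nodes: int) -> Dict[int, List[str]]:
    """Group a batch of discovered URLs by the machine responsible for them."""
    buckets: Dict[int, List[str]] = {node: [] for node in range(num_nodes)}
    for url in urls:
        buckets[assign_node(url, num_nodes)].append(url)
    return buckets


batch = [
    "https://shop.example/a",
    "https://shop.example/b",
    "https://news.example/today",
]
for node, urls in partition(batch, num_nodes=4).items():
    if urls:
        print(node, urls)
```

Keeping a whole site on one node makes per-site politeness rules, such as request delays, easy to enforce. Note that simple modulo assignment moves most hosts to a different node when you add or remove a machine; consistent hashing is the usual remedy.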

4. Parallel

A parallel crawler runs multiple crawling processes at the same time.

It aims to maximise download speed while avoiding downloading the same page more than once. Because two separate crawling processes might discover the same URL, the system needs a strategy for assigning newly discovered URLs so that each page is downloaded only once.
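The key requirement here, never downloading the same page twice, comes down to making the "have we seen this URL?" check atomic. This sketch uses Python threads and a lock-protected set, so each discovered URL can be claimed by exactly one worker. The `get_links` callback (download a page, return its links) is a hypothetical function you would supply, and crawling level by level is a simplification we chose to keep the example short.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, Set


class SeenSet:
    """Thread-safe set of URLs that some worker has already claimed."""

    def __init__(self) -> None:
        self._urls: Set[str] = set()
        self._lock = threading.Lock()

    def claim(self, url: str) -> bool:
        """Return True to exactly one caller per URL; everyone else gets False."""
        with self._lock:  # check-and-add must be atomic across threads
            if url in self._urls:
                return False
            self._urls.add(url)
            return True


def parallel_crawl(
    seeds: Iterable[str],
    get_links: Callable[[str], Iterable[str]],
    workers: int = 4,
) -> Set[str]:
    """Crawl level by level, downloading each level's pages concurrently."""
    seen = SeenSet()
    frontier: List[str] = [url for url in seeds if seen.claim(url)]
    downloaded: Set[str] = set()

    def process(url: str) -> List[str]:
        # Runs in a worker thread: download the page, then claim any new links.
        return [link for link in get_links(url) if seen.claim(link)]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        while frontier:
            results = pool.map(process, frontier)
            downloaded.update(frontier)
            frontier = [link for new_links in results for link in new_links]
    return downloaded


# Usage with a tiny in-memory link graph; several pages link to the same targets.
graph = {"a": ["b", "c"], "b": ["c", "d"], "c": ["d", "a"], "d": []}
fetch_log: List[str] = []


def fake_get_links(url: str) -> List[str]:
    fetch_log.append(url)  # list.append is safe to call from several threads in CPython
    return graph[url]


print(sorted(parallel_crawl(["a"], fake_get_links)))  # ['a', 'b', 'c', 'd']
print(sorted(fetch_log))  # ['a', 'b', 'c', 'd']: each page fetched once
```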

The Importance of Web Crawling

It is estimated that 73% of all data on the internet goes unused and unanalysed, which means only around a quarter of the data generated is actually put to use. Companies should improve their data collection methods, since data has proven to be a crucial component of our fast-paced digital society.

Data helps organisations make sound decisions, develop insights, and gather knowledge that can help them grow. Finding and collecting data can be difficult, so businesses need to understand how to crawl a website without being blocked.

Web crawlers are becoming increasingly important, mainly because there are few alternatives for gathering web data at scale. They handle a wide range of tasks efficiently, whether indexing web pages or protecting a brand from harm.

4 Common Uses of Crawlers

1. Brand Protection

Fraud, counterfeiting, identity theft, and reputational damage are all easier to prevent when the right data is collected regularly.

To protect their image across the internet, brands use crawlers to continually collect information that affects the company’s name, assets, and reputation.

2. Research

Crawling the web is essential for market research, which business owners need in order to understand their market and make informed decisions.

Before a brand enters a new market or launches a new product, it must conduct appropriate research to decide whether to go ahead. Web crawlers are used in this research to collect information from various corners of the industry.

3. Indexing

The internet is a vast universe with billions of web pages, yet internet users can find what they are looking for in a matter of seconds. This is possible because web crawlers scan the web for content and hyperlinks and then categorise them, making it simpler to match pages to user searches.

4. E-Commerce

E-Commerce is the business of selling goods and services over the internet. It is a booming and profitable business, but it is also easy for companies to make mistakes when they do not base their decisions on data.

Crawlers can be used to collect data such as product availability and pricing, helping online stores stay competitive and a company’s brand flourish.

Bonus: Best Practices for Web Crawling

The following essential tips on how to crawl a website will help you avoid getting blocked and get the data you need quickly and efficiently.

1. Check Robots.txt Protocol

Most websites publish rules for crawlers and scrapers in a robots.txt file at the root of the domain (for example, example.com/robots.txt). Checking it tells you whether, and which parts of, a website may be crawled and what to do to avoid getting blocked.

Before crawling any website, make sure it allows data collection from its pages. Examine its robots exclusion protocol (robots.txt) file and follow the website’s guidelines.

Even if the website allows crawling, be courteous and avoid causing it harm: follow the rules in its robots.txt file, crawl during off-peak hours, limit the number of requests from a single IP address, and put a delay between requests.
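Python’s standard library includes a robots.txt parser, `urllib.robotparser.RobotFileParser`. The sketch below parses a sample file, skips disallowed URLs, and waits between requests, using the site’s `Crawl-delay` when one is given. The user-agent name `ExampleBot`, the sample rules, and the 1-second default delay are assumptions for illustration; `fetch` is a placeholder for your own download function.

```python
import time
from typing import Callable, Iterable, List
from urllib.robotparser import RobotFileParser

USER_AGENT = "ExampleBot"  # hypothetical name: use your crawler's real user-agent string

# Sample rules. For a live site you would instead call
# parser.set_url("https://example.com/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # mark the rules as freshly fetched; can_fetch() refuses everything until this is set
    return parser


def polite_crawl(
    urls: Iterable[str],
    fetch: Callable[[str], object],
    rules: RobotFileParser,
    default_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Fetch only the URLs robots.txt allows, pausing after each request."""
    # Honour the site's Crawl-delay if it sets one, otherwise use our own default.
    delay = rules.crawl_delay(USER_AGENT) or default_delay
    fetched: List[str] = []
    for url in urls:
        if not rules.can_fetch(USER_AGENT, url):
            continue  # disallowed for our user agent: skip it
        fetch(url)
        fetched.append(url)
        sleep(delay)
    return fetched


rules = load_rules(ROBOTS_TXT)
print(rules.can_fetch(USER_AGENT, "https://example.com/products/1"))     # True
print(rules.can_fetch(USER_AGENT, "https://example.com/checkout/cart"))  # False
print(rules.crawl_delay(USER_AGENT))                                     # 2
```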

2. Use a Proxy Service

Proxies are the go-to tools for avoiding blocks on the internet. Select a proxy service with a large pool of IPs and a diverse range of locations, so you can choose the addresses and regions that suit your task.

Without proxies, large-scale web crawling is very difficult. Depending on your work, select a reputable proxy service provider and choose between data centre and residential IP proxies.

Using a third-party intermediary between your device and the target website helps you get around IP address blocks, adds a layer of anonymity, and enables access to websites that may be unavailable in your location.

For example, if you live in Australia, you may need to use a New Zealand (NZ) proxy to access online content that is only available in New Zealand.
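With Python’s standard library, routing requests through a proxy takes a `urllib.request.ProxyHandler`. The sketch below builds an opener that sends both HTTP and HTTPS traffic through one proxy. The proxy address is a placeholder; your provider will give you the real host, port, and any credentials.

```python
import urllib.request


def make_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build an opener that sends both HTTP and HTTPS requests through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Placeholder address: replace with the host and port your proxy provider gives you.
NZ_PROXY = "http://nz-proxy.example.net:8080"

opener = make_proxied_opener(NZ_PROXY)
# A real request would then look like this (not run here, since the proxy is fictional):
# with opener.open("https://example.co.nz/", timeout=10) as response:
#     html = response.read().decode("utf-8", errors="replace")
```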

3. Rotate IP Addresses

It is critical to rotate your IP addresses when using a proxy pool.

If you make too many requests from the same IP address, the target website will quickly flag you as a threat and ban that address. Rotating proxies makes your requests look as if they come from several different internet users, lowering your chances of being blocked.
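A minimal way to rotate is round-robin: each request takes the next proxy in the pool. The sketch below assumes you already have a list of proxy addresses (the ones shown are placeholders) and builds a standard-library `urllib` opener for whichever proxy is next. `ProxyRotator` is a hypothetical helper, not part of any library.

```python
import itertools
import urllib.request
from typing import List


class ProxyRotator:
    """Hands out proxies in round-robin order so consecutive requests use different IPs."""

    def __init__(self, proxy_urls: List[str]) -> None:
        if not proxy_urls:
            raise ValueError("need at least one proxy")
        self._cycle = itertools.cycle(list(proxy_urls))

    def next_proxy(self) -> str:
        return next(self._cycle)

    def next_opener(self) -> urllib.request.OpenerDirector:
        """Build an opener that routes the next request through the next proxy."""
        proxy = self.next_proxy()
        return urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )


rotator = ProxyRotator([
    "http://proxy-1.example.net:8080",  # placeholder addresses from your provider
    "http://proxy-2.example.net:8080",
    "http://proxy-3.example.net:8080",
])
for _ in range(4):
    print(rotator.next_proxy())
# http://proxy-1.example.net:8080
# http://proxy-2.example.net:8080
# http://proxy-3.example.net:8080
# http://proxy-1.example.net:8080
```

Picking a proxy at random works too; round-robin simply spreads requests evenly and guarantees that, with more than one proxy in the pool, no address is used twice in a row.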

4. Avoid Honeypot Traps

Honeypots are links hidden in a page’s HTML code that look like real links, but following them can trigger an immediate block. This works because they are invisible to human visitors but visible to crawling bots. When a bot follows one, it gives itself away as software and is automatically blocked.
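A crawler can skip the simplest honeypots by checking whether a link is hidden in the HTML itself before following it. This sketch flags links hidden with the `hidden` attribute or an inline `display:none` / `visibility:hidden` style, including links inside a hidden parent element. It is deliberately limited: links hidden through external stylesheets, CSS classes, or off-screen positioning only show up when the page is actually rendered (for example, in a headless browser), which this sketch does not do. The class and function names are hypothetical.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple

# Elements that never have a closing tag, so they must not be pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


def hidden_by_markup(attrs: Dict[str, Optional[str]]) -> bool:
    """True if the element's own attributes hide it from human visitors."""
    style = (attrs.get("style") or "").replace(" ", "").lower()
    return "hidden" in attrs or "display:none" in style or "visibility:hidden" in style


class VisibleLinkExtractor(HTMLParser):
    """Splits links into ones a human could see and ones hidden by inline markup."""

    def __init__(self) -> None:
        super().__init__()
        self._open: List[Tuple[str, bool]] = []  # (tag, hidden?) for each open element
        self.visible: List[str] = []
        self.hidden: List[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        # An element is hidden if it hides itself or sits inside a hidden parent.
        hidden = hidden_by_markup(attr_map) or (bool(self._open) and self._open[-1][1])
        href = attr_map.get("href")
        if tag == "a" and href:
            (self.hidden if hidden else self.visible).append(href)
        if tag not in VOID_TAGS:
            self._open.append((tag, hidden))

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; tolerates some unclosed elements.
        for i in range(len(self._open) - 1, -1, -1):
            if self._open[i][0] == tag:
                del self._open[i:]
                break


def split_links(html: str) -> Tuple[List[str], List[str]]:
    """Return (visible_links, hidden_links) for a page."""
    extractor = VisibleLinkExtractor()
    extractor.feed(html)
    extractor.close()
    return extractor.visible, extractor.hidden


page = """
<div style="display: none"><a href="/trap-1">Special offer</a></div>
<a href="/products">Products</a>
<a href="/trap-2" hidden>More</a>
"""
visible, hidden = split_links(page)
print(visible)  # ['/products']
print(hidden)   # ['/trap-1', '/trap-2']
```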

5. Change Patterns

Always keep the crawling pattern changing.

The pattern describes how your crawler is set up to traverse the website. It’s only a matter of time before you become blocked if you keep using the same basic crawling routine.

Add random clicks, scrolls, and mouse motions to make your crawling appear less predictable. The behaviour, however, should not be wholly random. When building a crawling pattern, one of the best practices is to imagine how a real user would explore the website and then apply those concepts to the tool.
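Mouse movements and scrolling need a browser automation tool and are beyond a short example, but timing is easy to vary. This sketch pauses for a random but bounded time after each request: around a base delay and never below a minimum, so the rhythm is irregular without being wholly random. The 3-second base, 1.5-second jitter, and 0.5-second floor are illustrative assumptions, and `fetch` is a placeholder for your own download function.

```python
import random
import time
from typing import Callable, Iterable, List, Optional


def jittered_delay(base: float, jitter: float, rng: random.Random, minimum: float = 0.5) -> float:
    """A pause of roughly `base` seconds, varied by up to +/- `jitter`, never below `minimum`."""
    return max(minimum, base + rng.uniform(-jitter, jitter))


def crawl_with_varied_timing(
    urls: Iterable[str],
    fetch: Callable[[str], object],
    base: float = 3.0,
    jitter: float = 1.5,
    seed: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> List[float]:
    """Fetch URLs in order with an irregular pause after each; returns the pauses used."""
    rng = random.Random(seed)  # pass a seed only when you need reproducible runs (e.g. tests)
    pauses: List[float] = []
    for url in urls:
        fetch(url)
        pause = jittered_delay(base, jitter, rng)
        pauses.append(pause)
        sleep(pause)
    return pauses


pauses = crawl_with_varied_timing(
    ["https://example.com/1", "https://example.com/2"],
    fetch=lambda url: None,      # stand-in for a real download function
    seed=42,
    sleep=lambda seconds: None,  # skip the real waiting in this demo
)
print([round(p, 2) for p in pauses])
```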

Conclusion

Gathering publicly available data is essential for business success, but it is not without obstacles. Web crawling is critical for data collection. E-commerce businesses, for example, use crawlers to gather fresh data from numerous websites and then use it to improve their business and marketing strategies.

Working with a professional team of SEO experts in your area can help boost your brand’s growth and ensure you get the support you need.

What's Next on Netbloom

In our next blog post, we will focus more on Indexing and give tips to ensure that your website is doing the work it’s supposed to do for your brand. We hope you learned something today. We’ll see you again.